A Study of Variable-Role-based Feature Enrichment in Neural Models of Code

Aftab Hussain, Md Rafiqul Islam Rabin, Bowen Xu, David Lo, Mohammad Amin Alipour

University of Houston

Carnegie Mellon University

Accepted at The 1st International Workshop on Interpretability and Robustness in Neural Software Engineering (InteNSE 2023), co-located with the 45th International Conference on Software Engineering (ICSE 2023), Melbourne, Australia

Understanding the roles that variables play in code has been found to help students learn programming. Could variable roles also help deep neural models perform coding tasks? We conduct an exploratory study:

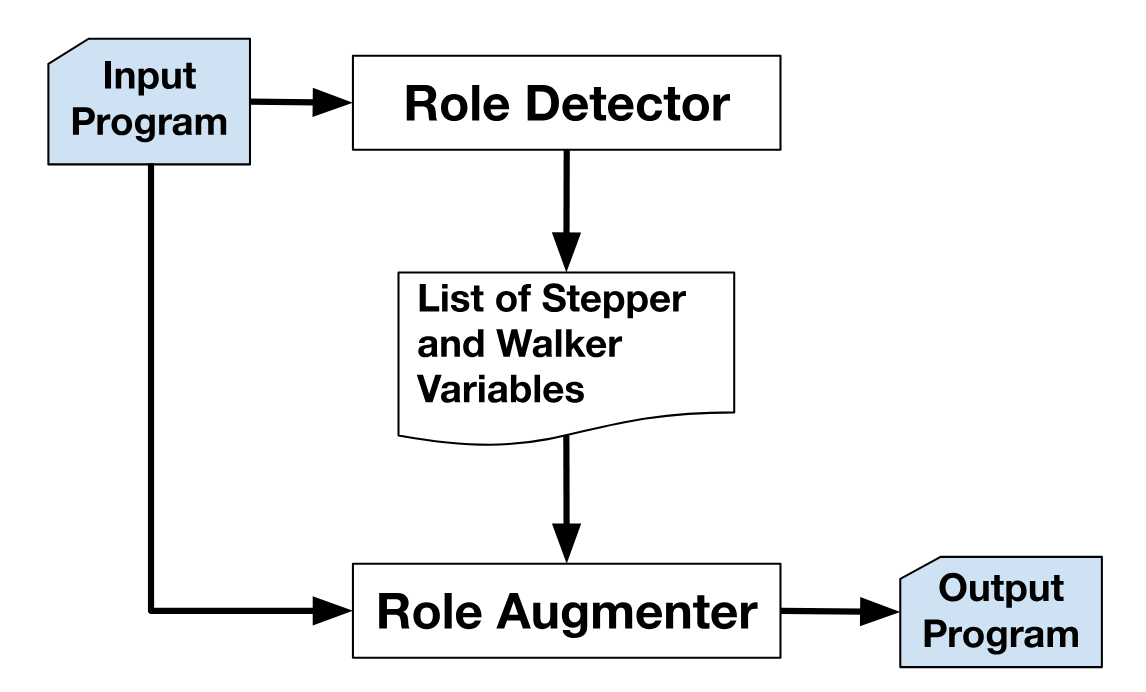

- Analyzed how an unsupervised feature enrichment approach based on variable roles affects the performance of neural models of code.

- Investigated whether variable roles, a programming education concept introduced by Sajaniemi et al., can help enhance neural models of code.

- Conducted the first study to analyze how variable role enrichment affects the training of the Code2Seq model.

- Enriched a source code dataset by annotating the roles of individual variables, and examined the impact of this enrichment on model training.

- Identified challenges and opportunities in feature enrichment for neural code intelligence models.
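To make the enrichment step more concrete, the following is a minimal sketch in Python. It assumes a crude syntactic classifier for four of Sajaniemi's roles (stepper, gatherer, most-recent holder, fixed value), plus a hypothetical tagging scheme that appends the detected role to each variable name. The functions `classify_roles` and `enrich`, the heuristics, and the suffix format are illustrative assumptions. They are not the annotation procedure used in the study.

```python
import ast
from collections import defaultdict


def classify_roles(source: str) -> dict[str, str]:
    """Assign a coarse role label to each assigned variable (hypothetical heuristics)."""
    tree = ast.parse(source)
    plain_assigns = defaultdict(int)  # number of plain `x = ...` assignments per name
    const_steps = set()               # `x += 1`-style updates, or `for x in range(...)`
    accumulations = set()             # `x += expr` where expr is not a constant
    loop_targets = set()              # `for x in collection`

    for node in ast.walk(tree):
        if isinstance(node, ast.Assign):
            for target in node.targets:
                if isinstance(target, ast.Name):
                    plain_assigns[target.id] += 1
        elif isinstance(node, ast.AugAssign) and isinstance(node.target, ast.Name):
            if isinstance(node.value, ast.Constant):
                const_steps.add(node.target.id)
            else:
                accumulations.add(node.target.id)
        elif isinstance(node, ast.For) and isinstance(node.target, ast.Name):
            it = node.iter
            if isinstance(it, ast.Call) and isinstance(it.func, ast.Name) and it.func.id == "range":
                const_steps.add(node.target.id)  # counting loop index acts as a stepper
            else:
                loop_targets.add(node.target.id)

    roles = {}
    for name in set(plain_assigns) | const_steps | accumulations | loop_targets:
        if name in const_steps:
            roles[name] = "stepper"
        elif name in accumulations:
            roles[name] = "gatherer"
        elif name in loop_targets:
            roles[name] = "most_recent_holder"
        elif plain_assigns[name] == 1:
            roles[name] = "fixed_value"
        else:
            roles[name] = "other"
    return roles


class RoleTagger(ast.NodeTransformer):
    """Rename every occurrence of a classified variable to `<name>_<role>`."""

    def __init__(self, roles: dict[str, str]):
        self.roles = roles

    def visit_Name(self, node: ast.Name) -> ast.Name:
        role = self.roles.get(node.id)
        if role:
            node.id = f"{node.id}_{role}"
        return node


def enrich(source: str) -> str:
    """Return the source with role-tagged identifiers (requires Python 3.9+ for ast.unparse)."""
    roles = classify_roles(source)
    tree = RoleTagger(roles).visit(ast.parse(source))
    return ast.unparse(tree)


snippet = """
total = 0
count = 0
limit = 10
for x in items:
    total += x
    count += 1
"""

print(sorted(classify_roles(snippet).items()))
print(enrich(snippet))
```

Suffixing identifiers is one simple way to expose roles to a token- or path-based model such as Code2Seq without changing its architecture. A realistic annotator would likely need data-flow analysis to recognize roles such as most-wanted holder or follower, which these purely syntactic rules cannot distinguish.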